Container-based infrastructures are among the hottest cloud computing solutions today. What's driving the excitement is the ability of containers to make application deployments more manageable, versionable, and faster. That's why it's so important to keep your Docker images as small and efficient as possible.

Here at nClouds, DevOps is a major focus, and we build CI/CD pipelines for many customers. We make heavy use of Docker containers to move applications easily between environments (e.g., prod, dev, stage).

Are you currently dealing with Docker images that range from 600MB to 1GB? Is each instruction in your Dockerfile adding a layer to the image? Do you need to maintain two Dockerfiles?

If so, you are probably running into some common issues:

Slower speed for developers

When developing locally, developers regularly pull Docker images. Docker does a good job of reusing layers it has already downloaded, but a large image still takes longer to fetch, and that lost time means reduced productivity for developers.

Unnecessary bandwidth cost

If you are deploying the Docker image across thousands of servers, a large image means paying to transfer the extra bytes to every one of them.

Performance and speed

In addition to cost, customers often run into performance and timeout issues when images are large.

Disk issues

Depending on the environment you deploy your containers to, you often face constraints on disk size, so it's important to keep images small.

Security holes

Large container images have greater potential for security holes. Smaller images usually have a smaller attack surface than images built on large base images.

The bottom line: reduce your Docker image size. But before we talk about how, it's worth understanding why Docker images grow in the first place.

Docker is like a version control system: each change creates a new layer. Every Dockerfile instruction that changes the filesystem (such as RUN, COPY, and ADD) adds a new layer on top of the previous one. That's why Dockerfiles often chain multiple commands into a single RUN instruction. The instruction below produces just one layer:

```dockerfile
RUN curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py \
    && python get-pip.py \
    && pip install awscli
```

Now imagine that your build needs to download source files and compile them inside the container (common with statically compiled languages). You must remove the source files afterward, or they become part of the image and increase its size. And you can't simply run `rm -rf $source_files_directory` in a later instruction: that just creates a new layer that hides the files, while the earlier layer that contains them still ships with the image.
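The same rule applies to any cleanup. Below is a minimal sketch of the difference, assuming a Debian-based image and using `curl` as a stand-in package (both are our choices for illustration, not part of the original setup). In the commented-out version, the package lists downloaded by `apt-get update` are committed in their own layer, so deleting them in a later RUN only hides them. In the active version, the cleanup runs inside the same RUN, so the files never land in a committed layer.

```dockerfile
FROM debian:bookworm-slim

# Anti-pattern (shown as comments): three RUN instructions create three layers.
# The package lists written by "apt-get update" are committed in the first
# layer; the last RUN only records them as deleted, so the image keeps them.
#
#   RUN apt-get update
#   RUN apt-get install -y --no-install-recommends curl
#   RUN rm -rf /var/lib/apt/lists/*

# Better: one RUN instruction, one layer. The package lists are deleted
# before the layer is committed, so they never become part of the image.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*
```

Building both variants and running `docker history` on each is one way to compare their per-layer sizes.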

How can you fix this issue? We’ll show you the old way and a better way.

Old way: Builder pattern

Are you currently using the builder pattern? If so, you’re using two Docker images:

- One image to perform a build.

- One slimmed-down image to ship the results of the first build without the penalty of the build toolchain and tooling in the first image.

You may have found that the only way to keep the layers small is to:

- Clean up artifacts that are no longer needed before moving on to the next layer.

- Use shell tricks and other logic to keep the layers as small as possible while being sure that each layer has only the artifacts it needs from the prior layer.
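For reference, here is a minimal sketch of the classic two-image builder pattern, applied to the Tomcat/Gradle project used throughout this post. The file name `Dockerfile.build` is hypothetical, and the two parts below are two separate files. Before multi-stage builds, Docker had no way to copy files from one image into another during a build, so a small host script has to glue them together: build the first image, `docker create` a container from it, `docker cp` the WAR out into the build context, remove the container, and then build the second image.

```dockerfile
# ---- File 1: Dockerfile.build (hypothetical name), JDK + Gradle build image ----
FROM openjdk:8
COPY . /usr/src/project
WORKDIR /usr/src/project
RUN wget https://downloads.gradle.org/distributions/gradle-4.8.1-bin.zip \
    && unzip gradle-4.8.1-bin.zip \
    && PATH=$PATH:$PWD/gradle-4.8.1/bin \
    && gradle prod

# ---- File 2: Dockerfile, slim runtime image ----
# Assumes the host script has already copied ROOT.war out of the build
# image and into this build context (e.g., with docker create + docker cp).
FROM tomcat:9.0.10-jre8
COPY ROOT.war /usr/local/tomcat/webapps/ROOT.war
COPY entrypoint.sh /
COPY tomcat_conf_prod/* /usr/local/tomcat/conf/
RUN chmod +x /entrypoint.sh
```

Keeping the two files and the glue script in sync is the maintenance cost of this pattern.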

For example, here is a common single-image approach to bringing up a Tomcat container and deploying an application on it:

```dockerfile
FROM tomcat:9.0.10-jre8

COPY . /usr/src/project
WORKDIR /usr/src/project

RUN apt-get update \
    && apt-get install -y openjdk-8-jdk \
    && wget https://downloads.gradle.org/distributions/gradle-4.8.1-bin.zip \
    && unzip gradle-4.8.1-bin.zip \
    && PATH=$PATH:$PWD/gradle-4.8.1/bin \
    && gradle prod \
    && mv /usr/src/project/build/ROOT.war /usr/local/tomcat/webapps/ROOT.war

COPY entrypoint.sh /
COPY tomcat_conf_prod/* /usr/local/tomcat/conf/
RUN chmod +x /entrypoint.sh
```

First, a COPY instruction adds our current code to the image. Then we install `openjdk` and download `gradle` to compile the code and build `ROOT.war`. After that, we move the `ROOT.war` file into Tomcat's `webapps` directory, copy in our production Tomcat configuration, and prepare an `entrypoint.sh` script to start the container.

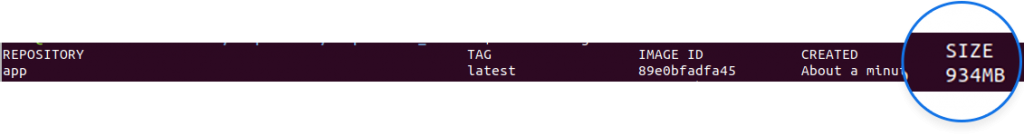

Here is the final result: the container itself looks pretty good, but the image is large (934MB).

A better way: Docker Multi-Stage

Docker Multi-Stage, available since Docker version 17.05 (May 2017), reduces the final size of the image by leaving behind libraries, dependencies, packages, and other build-time files that the final image doesn't need. The process consists of:

- Separating the build into different stages, just keeping the final result of each stage and moving it to the next one.

- Using multiple FROM statements in your Dockerfile. Each FROM instruction can use a different base, and each of them begins a new stage of the build.

- Selectively copying artifacts from one stage to another.

Here is the previous Dockerfile rewritten as a multi-stage build:

```dockerfile
FROM openjdk:8

COPY . /usr/src/project
WORKDIR /usr/src/project

RUN wget https://downloads.gradle.org/distributions/gradle-4.8.1-bin.zip \
    && unzip gradle-4.8.1-bin.zip \
    && PATH=$PATH:$PWD/gradle-4.8.1/bin \
    && gradle prod

FROM tomcat:9.0.10-jre8

COPY --from=0 /usr/src/project/build/ROOT.war /usr/local/tomcat/webapps/ROOT.war
COPY entrypoint.sh /
COPY tomcat_conf_prod/* /usr/local/tomcat/conf/
RUN chmod +x /entrypoint.sh
```

How does Docker Multi-Stage compare with using the Builder Pattern?

The main difference is that, with Docker Multi-Stage, we define two stages (effectively two images) in the same Dockerfile instead of maintaining two separate Dockerfiles. The first stage is based on `openjdk`, and we use it to compile our code and generate the `ROOT.war` file. The magic happens in the second stage, which is based on Tomcat: the `--from=0` flag on the `COPY` instruction copies `ROOT.war` from the first stage (stage 0) into the second one. That way, we drop the JDK, the Gradle distribution, the source code, and everything else we needed only for compiling, and keep just the most important thing: our `ROOT.war` file.
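Referring to a stage by index (`--from=0`) works, but the index can end up pointing at the wrong stage if someone later adds a stage above it. Docker also lets you name a stage with `AS` and refer to it by that name. A sketch of the same Dockerfile with a named stage (the name `build` is our choice):

```dockerfile
# Stage "build": JDK + Gradle, used only to produce ROOT.war
FROM openjdk:8 AS build
COPY . /usr/src/project
WORKDIR /usr/src/project
RUN wget https://downloads.gradle.org/distributions/gradle-4.8.1-bin.zip \
    && unzip gradle-4.8.1-bin.zip \
    && PATH=$PATH:$PWD/gradle-4.8.1/bin \
    && gradle prod

# Final stage: by default, only this stage becomes the built image
FROM tomcat:9.0.10-jre8
COPY --from=build /usr/src/project/build/ROOT.war /usr/local/tomcat/webapps/ROOT.war
COPY entrypoint.sh /
COPY tomcat_conf_prod/* /usr/local/tomcat/conf/
RUN chmod +x /entrypoint.sh
```

With a named stage, `docker build --target build .` stops after the build stage, which can be handy when debugging the compile step.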

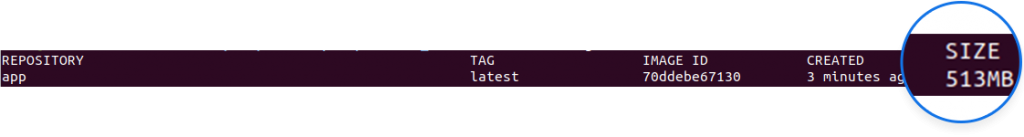

The final size of this image is 513MB, which means we reduced it by 45% by including only the important artifacts from our first stage and leaving behind all the dependencies we used to build them.
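As a quick check of that figure, using the two image sizes reported in this post:

$$
\frac{934\,\text{MB} - 513\,\text{MB}}{934\,\text{MB}} = \frac{421}{934} \approx 0.451 \approx 45\%
$$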

Additional benefits include:

- Better caching

- Shorter build and deploy times

- Reduced duplicate code

- Smaller security footprint

- Reduced amount of space used on the Docker host and artifact repository
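How much the caching benefit helps also depends on instruction order. In the multi-stage Dockerfile above, `COPY . /usr/src/project` comes before the Gradle download, so any source change invalidates the cache for the download step too. A minimal sketch of a cache-friendlier ordering, assuming the same Gradle project; the stage name `build` and the `/opt` install location are our choices, and the entrypoint and Tomcat configuration steps are omitted for brevity:

```dockerfile
# Build stage (named "build"; the name is our choice)
FROM openjdk:8 AS build

# Install Gradle BEFORE copying the source. The cache for a RUN step depends on
# its command text and the layers below it, not on your source files, so
# editing application code does not re-download or re-unpack Gradle.
WORKDIR /opt
RUN wget https://downloads.gradle.org/distributions/gradle-4.8.1-bin.zip \
    && unzip -q gradle-4.8.1-bin.zip \
    && rm gradle-4.8.1-bin.zip
ENV PATH="/opt/gradle-4.8.1/bin:${PATH}"

# Source changes invalidate the cache only from this COPY onward.
COPY . /usr/src/project
WORKDIR /usr/src/project
RUN gradle prod

# Runtime stage: only the WAR is copied across from the build stage
FROM tomcat:9.0.10-jre8
COPY --from=build /usr/src/project/build/ROOT.war /usr/local/tomcat/webapps/ROOT.war
```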

Reference source: https://docs.docker.com/develop/develop-images/multistage-build/

We hope this information is valuable to you. Please comment, and let us know what other how-to's you'd like us to address in future blog posts.